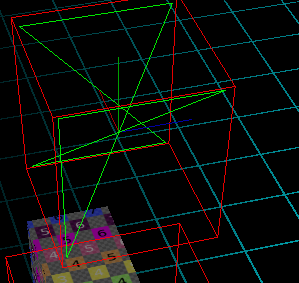

I noticed a pattern in this bug.

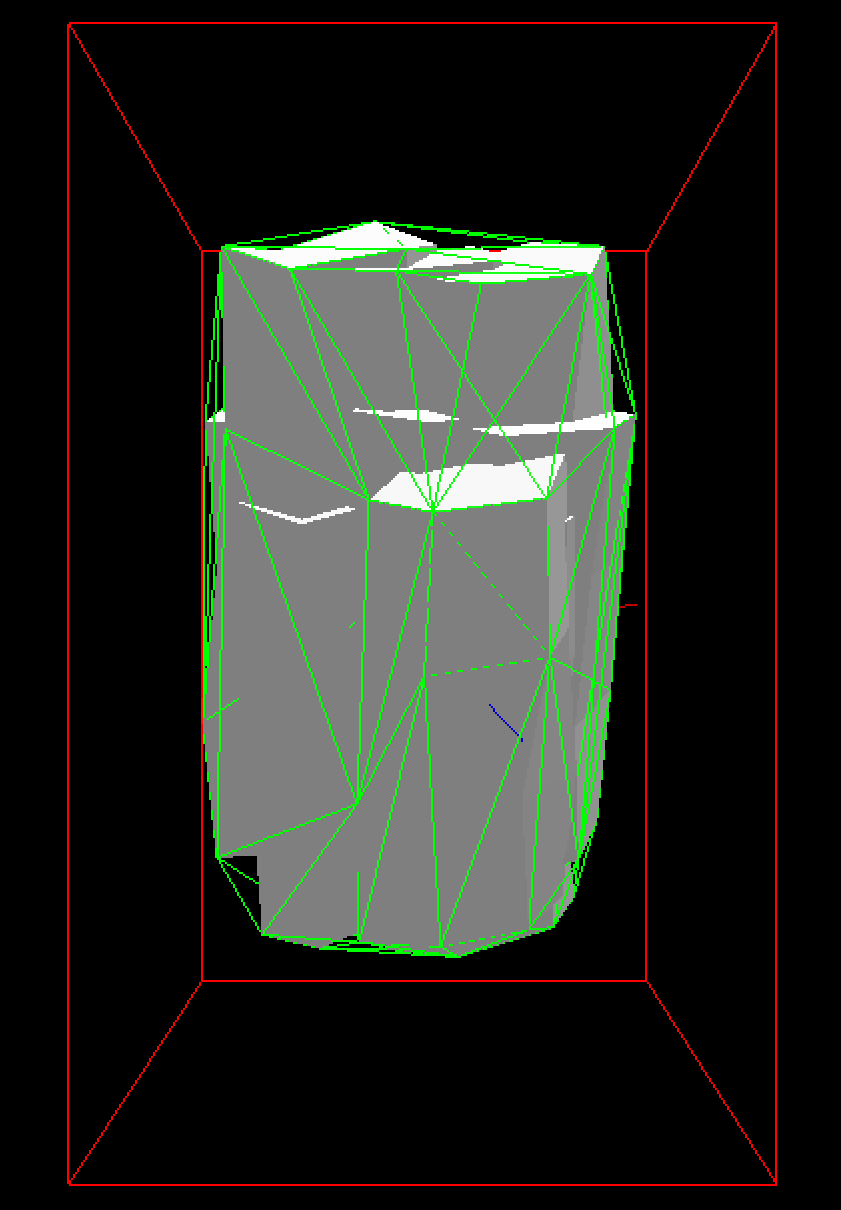

After the necessary, correct vertices are created, extra glitch vertices are generated along a random progression. If the progression places these vertices inside the object, the physics works fine: the glitch vertices lie inside the object, so they don't change the collision shape and don't need to be processed. If the progression goes beyond the object, the glitch vertices can end up very far from it. The physics can't compute such huge collisions, and the object is turned off with an overflow error.
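To confirm that glitch vertices are present in a given buffer, a debugging check could flag every vertex that lies outside a generous bounding box before the data reaches `NewConvexHullShape3D`. A minimal sketch, assuming the buffer holds packed xyz triplets; `FindFarVertices` and the `max_abs` threshold are hypothetical, not Defold API, and the bound should be chosen well above the size of the largest real object:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical debugging helper: returns the indices of vertices in a packed
// xyz array that lie outside the axis-aligned box [-max_abs, max_abs]^3.
std::vector<uint32_t> FindFarVertices(const float* xyz, uint32_t vertex_count, float max_abs)
{
    assert(xyz != nullptr || vertex_count == 0);
    std::vector<uint32_t> result;
    for (uint32_t i = 0; i < vertex_count; ++i)
    {
        const float* p = &xyz[i * 3];
        if (std::fabs(p[0]) > max_abs || std::fabs(p[1]) > max_abs || std::fabs(p[2]) > max_abs)
            result.push_back(i);
    }
    return result;
}
```

Logging the returned indices for a failing object would show whether the far vertices always come after the correct ones, as described above.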

Maybe the random factor is a bad memory access or a floating-point issue? For example, an out-of-bounds read of `vertices[i]` is undefined behavior and could return random vertex data.
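An out-of-bounds read can't be detected from the data alone (AddressSanitizer, enabled with `-fsanitize=address`, is the usual tool for that), but the floating-point side and the element count can be checked cheaply. A minimal sketch with a hypothetical `ValidateVertexData` helper, assuming packed xyz data:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical validation helper (not part of Defold): checks a packed xyz
// float buffer before it is handed to a physics shape constructor.
enum class VertexDataError
{
    None,
    CountNotMultipleOf3, // trailing floats would be silently dropped by m_Count / 3
    NonFinite,           // NaN or +/-Inf in a coordinate
};

VertexDataError ValidateVertexData(const float* data, uint32_t float_count)
{
    assert(data != nullptr || float_count == 0);
    if (float_count % 3 != 0)
        return VertexDataError::CountNotMultipleOf3;
    for (uint32_t i = 0; i < float_count; ++i)
    {
        if (!std::isfinite(data[i]))
            return VertexDataError::NonFinite;
    }
    return VertexDataError::None;
}
```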

We pass `&convex_shape->m_Data[0]` to `NewConvexHullShape3D`, which expects an array of floats. Is that correct? Maybe we need to pass just `convex_shape->m_Data`?
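Assume `m_Data` is a generated repeated-field struct that stores a `float*` named `m_Data` plus `m_Count` and overloads `operator[]`. The code indexes it with `[]` and reads `.m_Count`, so something like this seems likely, but it is an assumption. Under that assumption, `&convex_shape->m_Data[0]` is already a pointer to the first float, and passing the struct itself would not convert to `const float*`. The `FloatArray` type below is a hypothetical stand-in, bounds-checked here only for illustration:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a generated repeated-field struct: a pointer to
// contiguous floats plus an element count, with an indexing operator.
struct FloatArray
{
    float*   m_Data;
    uint32_t m_Count;
    float& operator[](uint32_t i) { assert(i < m_Count); return m_Data[i]; }
    const float& operator[](uint32_t i) const { assert(i < m_Count); return m_Data[i]; }
};

// Same parameter type as NewConvexHullShape3D's `vertices`: it only needs a
// pointer to the first float of the packed xyz data.
float SumFirstVertex(const float* vertices)
{
    return vertices[0] + vertices[1] + vertices[2];
}
```

If the assumption holds, `&convex_shape->m_Data[0]` and `convex_shape->m_Data.m_Data` are the same pointer, so the call itself looks correct; `SumFirstVertex(arr)` with the struct would not compile.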

The branch that creates the shape, including the 2D conversion:

```cpp
// 3D: pass the packed xyz floats straight through (m_Count / 3 vertices).
if (context->m_3D)
    resource->m_Shape3D = dmPhysics::NewConvexHullShape3D(context->m_Context3D, &convex_shape->m_Data[0], convex_shape->m_Data.m_Count / 3);
else
{
    // 2D: drop the z component, keeping 2 of every 3 floats.
    const uint32_t data_size = 2 * convex_shape->m_Data.m_Count / 3;
    float* data_2d = new float[2 * convex_shape->m_Data.m_Count / 3];
    for (uint32_t i = 0; i < data_size; ++i)
    {
        // i / 2 is the vertex index, i % 2 selects x (0) or y (1).
        data_2d[i] = convex_shape->m_Data[i/2*3 + i%2];
    }
    resource->m_Shape2D = dmPhysics::NewPolygonShape2D(context->m_Context2D, data_2d, data_size/2);
    delete [] data_2d;
}
```
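The index arithmetic in the 2D branch can be checked in isolation. The minimal standalone copy of that loop below (the `DropZ` name is hypothetical) shows that output index `i` reads `i / 2 * 3 + i % 2`, which is the x or y of vertex `i / 2`. For any `m_Count`, the largest index read stays below `m_Count`, so this loop is unlikely to be the source of an out-of-bounds read:

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Standalone version of the xyz -> xy packing loop from the 2D branch.
// Output index i maps to source index (i / 2) * 3 + (i % 2), skipping every z.
std::vector<float> DropZ(const float* xyz, uint32_t float_count)
{
    assert(xyz != nullptr || float_count == 0);
    const uint32_t data_size = 2 * float_count / 3;
    std::vector<float> xy(data_size);
    for (uint32_t i = 0; i < data_size; ++i)
        xy[i] = xyz[i / 2 * 3 + i % 2];
    return xy;
}
```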

3D shape creation:

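```cpp
HCollisionShape3D NewConvexHullShape3D(HContext3D context, const float* vertices, uint32_t vertex_count)
{
    // The scaled copy is handed to Bullet as raw floats, so btScalar must be float.
    assert(sizeof(btScalar) == sizeof(float));
    float scale = context->m_Scale;
    // vertices is expected to hold vertex_count packed xyz triplets.
    const uint32_t elem_count = vertex_count * 3;
    float* v = new float[elem_count];
    for (uint32_t i = 0; i < elem_count; ++i)
    {
        v[i] = vertices[i] * scale;
    }
    // Stride of 3 floats per point; btConvexHullShape copies the points,
    // so the temporary buffer can be freed right away.
    btConvexHullShape* hull = new btConvexHullShape(v, vertex_count, sizeof(float) * 3);
    delete [] v;
    return hull;
}
```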

2D shape creation:

```cpp
HCollisionShape2D NewPolygonShape2D(HContext2D context, const float* vertices, uint32_t vertex_count)
{
    b2PolygonShape* shape = new b2PolygonShape();
    float scale = context->m_Scale;
    // vertices is expected to hold vertex_count packed xy pairs.
    const uint32_t elem_count = vertex_count * 2;
    float* v = new float[elem_count];
    for (uint32_t i = 0; i < elem_count; ++i)
    {
        v[i] = vertices[i] * scale;
    }
    // Reinterpret the packed xy pairs as b2Vec2; Set() copies them into the shape.
    shape->Set((b2Vec2*)v, vertex_count);
    delete [] v;
    return shape;
}
```
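In the 2D path, dropping z from the hull of an extruded shape yields each (x, y) point twice, once per face. As far as I know, `b2PolygonShape::Set()` accepts at most `b2_maxPolygonVertices` points (8 by default), and some older Box2D versions assert on zero-length edges instead of welding duplicates. The minimal sketch below is a hypothetical pre-pass that removes near-duplicate points before calling `NewPolygonShape2D`. `Vec2`, `RemoveDuplicatePoints` and `kMaxPolygonVertices` are illustrative names, not Defold or Box2D API:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct Vec2 { float x, y; };

// Mirrors Box2D's default b2_maxPolygonVertices for illustration only.
const uint32_t kMaxPolygonVertices = 8;

// Keeps the first occurrence of each point and drops later points that lie
// within `tolerance` of an already kept point on both axes.
std::vector<Vec2> RemoveDuplicatePoints(const std::vector<Vec2>& points, float tolerance)
{
    assert(tolerance >= 0.0f);
    std::vector<Vec2> unique;
    for (const Vec2& p : points)
    {
        bool duplicate = false;
        for (const Vec2& q : unique)
        {
            if (std::fabs(p.x - q.x) <= tolerance && std::fabs(p.y - q.y) <= tolerance)
            {
                duplicate = true;
                break;
            }
        }
        if (!duplicate)
            unique.push_back(p);
    }
    return unique;
}
```

A caller could then log a warning when the deduplicated count still exceeds `kMaxPolygonVertices`.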

I also found an interesting post with recommendations for working with `btConvexHullShape`:

- Use `setMargin(0)` to remove the bug with extra padding around the shape.
- Use `btShapeHull` to reduce the polygon count in `btConvexHullShape`.
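On the margin point: as I understand Bullet's convex shapes, the collision algorithms query a support point. This is the farthest hull vertex in a given direction, pushed outward along that direction by the collision margin, which is where the extra padding comes from. The sketch below is plain C++ rather than Bullet's API (`Vec3` and `SupportWithMargin` are illustrative names). It shows that a non-zero margin moves the support point past the actual vertices:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec3 { float x, y, z; };

static float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Returns the hull vertex with the largest projection onto dir, then offsets
// it by `margin` along the normalized dir (skipped for a zero direction).
Vec3 SupportWithMargin(const Vec3* points, uint32_t count, Vec3 dir, float margin)
{
    assert(points != nullptr && count > 0);
    uint32_t best = 0;
    float best_dot = Dot(points[0], dir);
    for (uint32_t i = 1; i < count; ++i)
    {
        float d = Dot(points[i], dir);
        if (d > best_dot) { best_dot = d; best = i; }
    }
    Vec3 s = points[best];
    float len = std::sqrt(Dot(dir, dir));
    if (margin != 0.0f && len > 0.0f)
    {
        s.x += margin * dir.x / len;
        s.y += margin * dir.y / len;
        s.z += margin * dir.z / len;
    }
    return s;
}
```

With `margin` set to 0 the support point is exactly a hull vertex, which matches the effect the post attributes to `setMargin(0)`.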