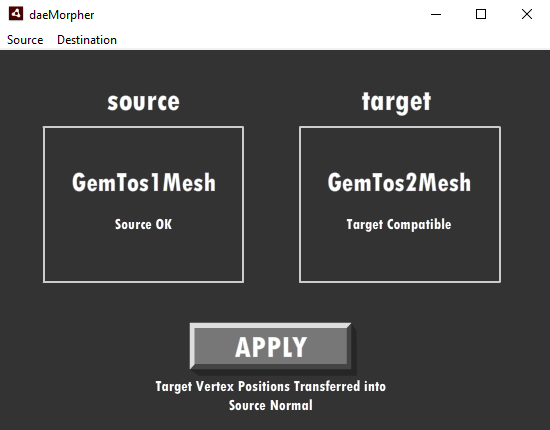

To do the morphing, I think you could pack the positions of the second mesh into the normals of the first mesh, since you don't seem to use the normals in your shading. The vertex shader can then interpolate between the two attributes. This only works if both meshes have the same number of vertices in the same order. You would need to do the packing as a preprocessing step, maybe with a Python script. I have been playing around with mesh generation for some GPU stuff lately; here is a simple Python script that creates grid-shaped meshes:

```python
import os
import sys

# COLLADA 1.4.1 template for a single mesh. The names in braces are
# placeholders filled in by str.format() in gen_model().
template = """<?xml version="1.0" encoding="utf-8"?>
<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">
<asset><contributor><author></author><authoring_tool>FBX COLLADA exporter</authoring_tool><comments></comments></contributor><created>2012-10-10T12:34:45Z</created><keywords></keywords><modified>2012-10-10T12:34:45Z</modified><revision></revision><subject></subject><title></title><unit meter="1.000000" name="centimeter"></unit><up_axis>Y_UP</up_axis></asset>
<library_geometries>
  <geometry id="pPlane1-lib" name="pPlane1Mesh">
    <mesh>
      <source id="pPlane1-POSITION">
        <float_array id="pPlane1-POSITION-array" count="{vertex_elem_count}">
{positions}
        </float_array>
        <technique_common>
          <accessor source="#pPlane1-POSITION-array" count="{vertex_count}" stride="3">
            <param name="X" type="float"/>
            <param name="Y" type="float"/>
            <param name="Z" type="float"/>
          </accessor>
        </technique_common>
      </source>
      <source id="pPlane1-Normal0">
        <float_array id="pPlane1-Normal0-array" count="{vertex_elem_count}">
{normals}
        </float_array>
        <technique_common>
          <accessor source="#pPlane1-Normal0-array" count="{vertex_count}" stride="3">
            <param name="X" type="float"/>
            <param name="Y" type="float"/>
            <param name="Z" type="float"/>
          </accessor>
        </technique_common>
      </source>
      <source id="pPlane1-UV0">
        <float_array id="pPlane1-UV0-array" count="{uv_elem_count}">
{uvs}
        </float_array>
        <technique_common>
          <accessor source="#pPlane1-UV0-array" count="{uv_count}" stride="2">
            <param name="S" type="float"/>
            <param name="T" type="float"/>
          </accessor>
        </technique_common>
      </source>
      <vertices id="pPlane1-VERTEX">
        <input semantic="POSITION" source="#pPlane1-POSITION"/>
        <input semantic="NORMAL" source="#pPlane1-Normal0"/>
      </vertices>
      <triangles count="{tri_count}" material="lambert1">
        <input semantic="VERTEX" offset="0" source="#pPlane1-VERTEX"/>
        <input semantic="TEXCOORD" offset="1" set="0" source="#pPlane1-UV0"/>
        <p> {indices}</p></triangles>
    </mesh>
  </geometry>
</library_geometries>
<scene>
  <instance_visual_scene url="#"></instance_visual_scene>
</scene>
</COLLADA>
"""


def flatten(x):
    """Flatten a list of lists into a single list."""
    return [v for sub in x for v in sub]


def gen_model(width, height, filename):
    # One quad per grid cell; every quad gets its own 4 vertices (no sharing).
    quads = [[x, y] for x in range(width) for y in range(height)]

    # Corner order per quad: (x, y), (x+1, y), (x, y+1), (x+1, y+1).
    positions = [[x, y, 0, (x + 1), y, 0, x, (y + 1), 0, (x + 1), (y + 1), 0]
                 for x, y in quads]
    positions = flatten(positions)
    vertex_elem_count = len(positions)
    # Integer division: COLLADA expects an integer count, while a plain "/"
    # would write e.g. "16.0" under Python 3.
    vertex_count = vertex_elem_count // 3
    tri_count = len(quads) * 2

    # The NORMAL slot is repurposed to carry per-quad data, repeated for all
    # 4 corners: the quad center in [0, 1] grid space, plus a linear cell id
    # (x + y * width) normalized to [0, 1).
    normals = [[(x + 0.5) / width, (y + 0.5) / height,
                (x + (y * width)) / float(width * height)]
               for x, y in quads for i in range(4)]
    normals = flatten(normals)

    # Every quad uses the same 4 UVs, so the UV source only needs 4 entries.
    uvs = [0.0, 0.0,
           1.0, 0.0,
           0.0, 1.0,
           1.0, 1.0]

    # Two triangles per quad (corners 3-2-0 and 3-0-1). Each index pair is
    # (vertex index, uv index), matching the VERTEX input (offset 0) and the
    # TEXCOORD input (offset 1).
    corner_indices = [3, 2, 0, 3, 0, 1]
    indices = [[(i + q * 4), i] for q in range(len(quads)) for i in corner_indices]
    indices = flatten(indices)

    # Create the output directory (e.g. "models/") if it does not exist yet.
    directory = os.path.dirname(filename)
    if directory:
        os.makedirs(directory, exist_ok=True)

    with open(filename, "w") as f:
        f.write(template.format(positions=" ".join(map(str, positions)),
                                normals=" ".join(map(str, normals)),
                                uvs=" ".join(map(str, uvs)),
                                vertex_elem_count=vertex_elem_count,
                                vertex_count=vertex_count,
                                uv_elem_count=len(uvs),
                                uv_count=len(uvs) // 2,
                                indices=" ".join(map(str, indices)),
                                tri_count=tri_count))


def main():
    # Grid size in quads, passed on the command line: WIDTH HEIGHT
    width = int(sys.argv[1])
    height = int(sys.argv[2])
    filename = "models/grid_{}_{}.dae".format(width, height)
    gen_model(width, height, filename)


if __name__ == "__main__":
    main()
```
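For the morphing itself, the packing step could look roughly like the sketch below. It assumes both meshes are already flattened into `[x, y, z, x, y, z, ...]` lists (the layout the grid script uses for `positions`) with the same vertex count and vertex order. `pack_morph_target` and `morph` are hypothetical names; `morph` only mirrors on the CPU what the vertex shader would do with the two attributes, i.e. a linear blend like GLSL's `mix(position, normal, t)`.

```python
def pack_morph_target(positions_a, positions_b):
    """Hypothetical helper: build the NORMAL array for mesh A.

    The "normals" are simply mesh B's positions, so both meshes must have
    the same number of vertices in the same order.
    """
    if len(positions_a) != len(positions_b):
        raise ValueError("meshes must have the same number of vertices")
    if len(positions_a) % 3 != 0:
        raise ValueError("expected flat [x, y, z, ...] position lists")
    return list(positions_b)


def morph(position, target, t):
    """CPU stand-in for the vertex shader: blend from A (t=0) to B (t=1)."""
    return tuple(p + (q - p) * t for p, q in zip(position, target))


# Example: a single vertex moving from (0, 0, 0) to (2, 4, 0).
normals = pack_morph_target([0, 0, 0], [2, 4, 0])
print(morph((0, 0, 0), normals[0:3], 0.5))  # -> (1.0, 2.0, 0.0)
```

The packed list would then take the place of the per-quad values, e.g. `normals=" ".join(map(str, packed))` in the `template.format(...)` call.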