Sorry it took so long to get back to you guys; it’s been a busy time…

So like @britzl and @roccosaienz are saying, it’s quite simple really. To begin with, anyway. I’ve begun working on a little tester project using Orthographic and have had some success.

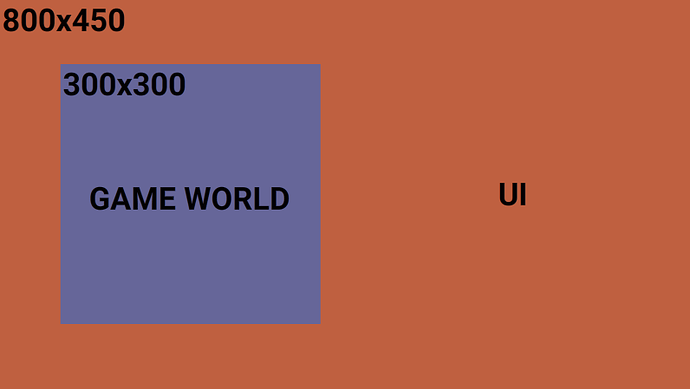

Here’s what it looks like with a normal viewport (note: line & mouse cursor are explained later):

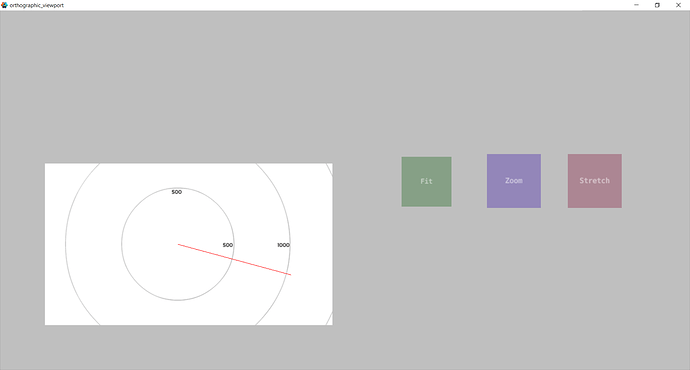

And here I’ve reduced the size of the viewport to a subset of the screen like I wanted:

As suggested above, all I’ve done is change the standard viewport:

```lua
render.set_viewport(0, 0, render.get_window_width(), render.get_window_height())
```

To something that has an offset and is half the width/height of the project:

```lua
render.set_viewport(100, 100, 740, 460)
```

After rendering the game world I set the projection back to normal for the GUI. All good so far.

The next step is to try and handle mouse input in this modified viewport. I draw a line from the middle of the “game” world to the cursor, which works fine in the normal project but not in the modified viewport.

(NOTE: My screen capture software seems to show my mouse in the wrong position in the video. The line is in fact drawn to the cursor in the “normal” project, but not in the version with the amended viewport.)
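One thing that may help before any unprojecting: only react to the mouse when it is actually inside the shrunken viewport. Below is a minimal sketch in plain Lua. `point_in_viewport` and the `{x, y, width, height}` table are hypothetical names, and it assumes the cursor position is in the same window-pixel space that `render.set_viewport` uses.

```lua
-- Hypothetical helper (not part of Orthographic or Defold): returns true when
-- a position lies inside a viewport rectangle. The rectangle uses the same
-- left/bottom/width/height order as render.set_viewport and is assumed to be
-- in window pixels, like the position being tested.
local function point_in_viewport(px, py, viewport)
	return px >= viewport.x and px < viewport.x + viewport.width
		and py >= viewport.y and py < viewport.y + viewport.height
end

-- The viewport from this post: offset by 100 px and 740 x 460 in size.
local viewport = { x = 100, y = 100, width = 740, height = 460 }

local inside = point_in_viewport(470, 330, viewport)  -- centre of the viewport
local outside = point_in_viewport(50, 50, viewport)   -- in the left/bottom margin
```

In `on_input()` this would presumably be fed `action.screen_x`/`action.screen_y` and used to ignore input that lands in the margin around the viewport.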

I think I need to be using `screen_to_world` (because it produces the right result in the normal project). The difference between screen and window coordinates never ceases to confuse me (same for `action.x` and `action.screen_x`).
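As far as I understand it, `action.x`/`action.y` are scaled to the display size set in *game.project*, while `action.screen_x`/`action.screen_y` are in actual window pixels, so the two only agree while the window is the same size as the project. A minimal sketch of the conversion, assuming a plain proportional stretch between the two spaces (the helper name and the 1000 x 600 window are made up for illustration):

```lua
-- Hypothetical helper: convert a position in project coordinates (the space of
-- action.x/action.y) to window pixels (the space of action.screen_x/screen_y),
-- assuming the window is a plain proportional stretch of the project.
local function project_to_window(x, y, project_w, project_h, window_w, window_h)
	return x * window_w / project_w, y * window_h / project_h
end

-- A 1480 x 920 project (twice the 740 x 460 viewport above) shown in a
-- window that has been resized to 1000 x 600.
local wx, wy = project_to_window(740, 460, 1480, 920, 1000, 600)
-- The project's centre lands on the window's centre: wx == 500, wy == 300.
```

I believe this matters here because `render.set_viewport` works in window pixels, so combining it with `action.x` only lines up while the window keeps its original size.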

Drilling into the Orthographic module, I see no reference to a viewport in the `screen_to_world` function:

```lua
--- Convert screen coordinates to world coordinates based
-- on a specific camera's view and projection
-- Screen coordinates are the scaled coordinates provided by action.x and action.y
-- in on_input()
-- @param camera_id
-- @param screen Screen coordinates as a vector3
-- @return World coordinates
-- http://webglfactory.blogspot.se/2011/05/how-to-convert-world-to-screen.html
function M.screen_to_world(camera_id, screen)
	assert(camera_id, "You must provide a camera id")
	assert(screen, "You must provide screen coordinates to convert")
	local view = cameras[camera_id].view or MATRIX4
	local projection = cameras[camera_id].projection or MATRIX4
	return M.unproject(view, projection, vmath.vector3(screen))
end
```

Drilling deeper, `M.unproject` doesn’t reference the viewport either:

```lua
--- Translate screen coordinates to world coordinates given a
-- view and projection matrix
-- @param view View matrix
-- @param projection Projection matrix
-- @param screen Screen coordinates as a vector3
-- @return The mutated screen coordinates (ie the same v3 object)
-- translated to world coordinates
function M.unproject(view, projection, screen)
	assert(view, "You must provide a view")
	assert(projection, "You must provide a projection")
	assert(screen, "You must provide screen coordinates to translate")
	local inv = vmath.inv(projection * view)
	screen.x, screen.y, screen.z = unproject_xyz(inv, screen.x, screen.y, screen.z)
	return screen
end
```

Finally, `unproject_xyz`:

```lua
local function unproject_xyz(inverse_view_projection, x, y, z)
	x = (2 * x / DISPLAY_WIDTH) - 1
	y = (2 * y / DISPLAY_HEIGHT) - 1
	z = (2 * z)
	local inv = inverse_view_projection
	local x1 = x * inv.m00 + y * inv.m01 + z * inv.m02 + inv.m03
	local y1 = x * inv.m10 + y * inv.m11 + z * inv.m12 + inv.m13
	local z1 = x * inv.m20 + y * inv.m21 + z * inv.m22 + inv.m23
	return x1, y1, z1
end
```

Here I think we’ve found something, since this is the first place the display width/height are referenced. My guess, though, is that because what I’m doing is non-standard, there’s no reason for the module to handle viewport offsets or any size other than the display width/height (since the area covered by the camera is always going to match the display). Is that right?
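To check that hunch with numbers, here is a small sketch comparing the normalization `unproject_xyz` does (dividing by the display size) with a hypothetical viewport-aware version that first subtracts the viewport offset. It assumes the window is still 1480 x 920, so display coordinates and window pixels coincide; both function names are illustrative, not Orthographic’s.

```lua
-- Normalization as in Orthographic's unproject_xyz: position -> -1..1
-- across the whole display.
local function to_ndc_display(x, y, display_w, display_h)
	return (2 * x / display_w) - 1, (2 * y / display_h) - 1
end

-- Hypothetical viewport-aware version: position relative to the viewport's
-- bottom-left corner, scaled by the viewport's size instead of the display's.
local function to_ndc_viewport(x, y, vp)
	return (2 * (x - vp.x) / vp.width) - 1, (2 * (y - vp.y) / vp.height) - 1
end

local vp = { x = 100, y = 100, width = 740, height = 460 }

-- Cursor exactly at the centre of the shrunken viewport (100 + 370, 100 + 230).
local dx, dy = to_ndc_display(470, 330, 1480, 920)
local vx, vy = to_ndc_viewport(470, 330, vp)
-- vx, vy are 0, 0 (the camera's centre); dx, dy are roughly -0.36, -0.28,
-- i.e. the display-based version puts the cursor down and to the left.
```

That down-and-left shift is consistent with the line missing the cursor only in the shrunken viewport.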

So, what do I need to modify to make Orthographic handle a custom viewport à la @roccosaienz? That is:

```lua
render.set_viewport(left_corner, bottom_corner, viewport_width, viewport_height)
```
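For what it’s worth, this is the kind of change I have in mind: a sketch of a viewport-aware copy of `unproject_xyz`, not a patch that exists in Orthographic. `unproject_xyz_viewport`, the `vp` table and `make_inverse` are hypothetical. The matrix is a plain Lua table with the same `m00`..`m23` fields the original reads, so the sketch runs outside Defold; `make_inverse` just builds an illustrative inverse view-projection for an orthographic camera centred at (cx, cy). The x/y passed in are assumed to be in the same window-pixel space as the viewport (so probably `action.screen_x`/`action.screen_y` if the window can be resized).

```lua
-- Hypothetical viewport-aware variant of Orthographic's unproject_xyz.
-- vp = { x = left, y = bottom, width = w, height = h }, in window pixels,
-- matching the arguments passed to render.set_viewport.
local function unproject_xyz_viewport(inv, x, y, z, vp)
	-- Shift by the viewport offset and scale by the viewport size
	-- (the original divides by DISPLAY_WIDTH/DISPLAY_HEIGHT instead).
	x = (2 * (x - vp.x) / vp.width) - 1
	y = (2 * (y - vp.y) / vp.height) - 1
	z = (2 * z) -- unchanged from the original
	local x1 = x * inv.m00 + y * inv.m01 + z * inv.m02 + inv.m03
	local y1 = x * inv.m10 + y * inv.m11 + z * inv.m12 + inv.m13
	local z1 = x * inv.m20 + y * inv.m21 + z * inv.m22 + inv.m23
	return x1, y1, z1
end

-- Illustrative inverse view-projection for an orthographic camera centred on
-- (cx, cy) that sees half_w/half_h world units either side of its centre.
local function make_inverse(half_w, half_h, cx, cy)
	return {
		m00 = half_w, m01 = 0,      m02 = 0, m03 = cx,
		m10 = 0,      m11 = half_h, m12 = 0, m13 = cy,
		m20 = 0,      m21 = 0,      m22 = 1, m23 = 0,
	}
end

local vp = { x = 100, y = 100, width = 740, height = 460 }
local inv = make_inverse(370, 230, 1000, 500)

-- Viewport centre maps to the camera centre; top-right corner to its edge.
local cx, cy = unproject_xyz_viewport(inv, 470, 330, 0, vp)
local ex, ey = unproject_xyz_viewport(inv, 840, 560, 0, vp)
```

Inside Defold, `inv` would presumably be `vmath.inv(projection * view)` as in `M.unproject`, and `screen_to_world`/`unproject` would need an extra viewport argument to pass through; that plumbing is left out here.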

Thanks for any help!